KI DESIGN NATIONAL ELECTION SOCIAL MEDIA MONITORING PLAYBOOK — PART IV of V

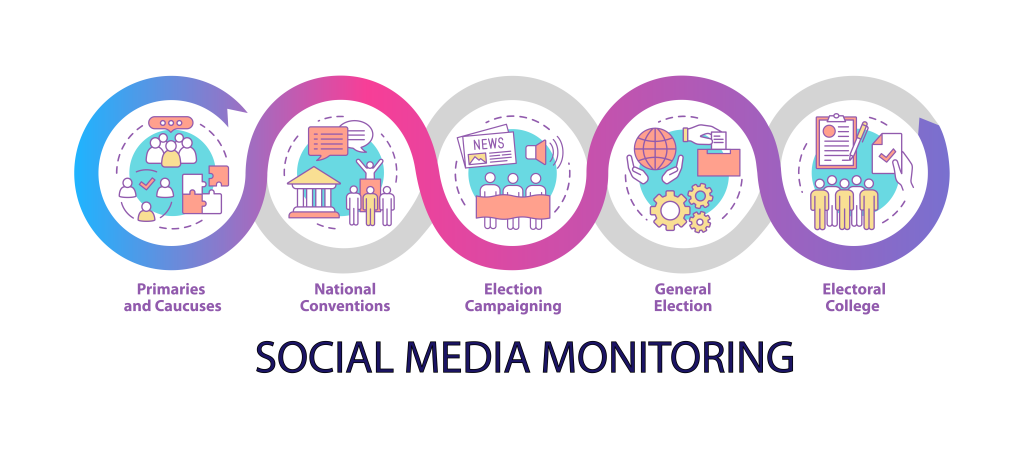

Monitoring Online Discourse During An Election: A 5-Part Series. How to monitor social media with AI-based tools during an election campaign. Traditional election monitoring is a formalized process in democratic countries, set out in the mandate of the national Electoral Management Body (EMB). As social and digital cultures change, however, EMBs are finding it useful…

Wael Hassan

MANAGING OPERATIONAL ISSUES DURING AN ELECTION — PART III of V

Monitoring Online Discourse During An Election: A 5-Part Series. The advantages of managing election logistical issues through social media. Organizing the logistics of an election is a complex process. It’s a question of scale; the sheer numbers involved – of voters, of polling options and locations, and of election materials – mean that things can,…

Wael Hassan

IDENTIFYING DISINFORMATION — PART II of V

Monitoring Online Discourse During An Election: A 5-Part Series. Using AI to track disinformation during an election campaign. How can online disinformation be identified and tracked? KI Design provided social media monitoring solutions for the 2019 Canadian federal election.[1] KI Social is a suite of tools designed to support three main areas of Electoral Management…

Wael Hassan

How do I permanently delete my Facebook account?

Although there is a Facebook page that leads people to believe their account has been deleted, the information is in fact never deleted. What’s their excuse? Someone else may have liked your picture or article. From Facebook’s pages: If you don’t think you’ll use Facebook again, you can request to have your account permanently deleted. Please…

Wael Hassan

The 4 steps you should take before engaging with Social Media Data

Organizations are using big data to help consumers. The question is what steps organizations can take to avoid inadvertently harming consumers. In the event of legal action, an organization’s preparedness is a determining factor in how well the case will move forward. In court matters, the electronically stored information is generally subject to the same…

Wael Hassan

Social Media’s Big Data Collection

The following real examples highlight a few areas in which big data has helped to improve organizational processes. In education, some institutions have used big data to identify student candidates for advanced classes. In finance, big data has been used to provide access to credit through non-traditional methods, for example,…

Wael Hassan

Overcoming the Challenges of Privacy of Social Media in Canada

In Canada, data protection is regulated by both federal and provincial legislation. Organizations that capture and store personal information are subject to several Canadian laws. The federal Personal Information Protection and Electronic Documents Act (PIPEDA), which applies to personal information handled in the course of commercial activities, came fully into force in 2004. PIPEDA requires organizations to obtain consent…

Wael Hassan

A Lesson to Know: The Unforgiving Culture of Social Media

For better or for worse, public figures ranging from celebrities to CEOs to politicians to athletes have for decades been remembered, often notoriously, for 10-or-15-second snippets of speech endlessly repeated as quotes in newspapers, in television commercials or on news reports until they come to capsulize the person. For example, way back in 2014, Elon…

Wael Hassan

Social Media as Political Warfare

Social media has risen to immeasurable power. Whereas in the past people would get their news updates from television or the radio, now it is ordinary people (often in 140 characters or fewer) who spread the news. While sharing opinions on social media outlets has the power to liberate and empower people, the…

Wael Hassan

Building a Social Media Pipeline

Companies that have developed Success Criteria, established their Style, decided on their Sources, Set up a business process, and Survey their results are winning big on social media. The least understood part of building an enterprise social media service is how to build a social media pipeline. This presentation describes how to do that. Building a Social…

Wael Hassan

Why Social Media Matters

As the global uptake of social media continues to climb, a new outlet for targeting a wide range of demographics has emerged. While traditional forms of media such as print advertisements, billboards and networking have remained prominent, the use of social media as a form of marketing has increased exponentially as more users…

Wael Hassan

Top 3 Tweets on Ransomware

During the last three days we collected 461,325 tweets. The internet clearly thinks that the NSA enabled the ransomware attack and that it will happen again: 2,056 RT @Snowden: When @NSAGov enabled ransomware eats the internet, help comes from researchers, not spy agencies. Amazing story. 1,191 RT @chemaalonso: El lado del mal – El…

Wael Hassan