Seizing the Opportunities of Big Data

A good place to start with data analytics is to subscribe to reports on data trends, or to a trends-based web service. These services, such as Microsoft Insights, can provide valuable research data relevant to your business operations. Big data allows researchers to collect and analyze various industry trends and patterns, draw conclusions, and publish…

Disinformation is polluting democracy

Disinformation campaigns and the future of elections: United States citizens are still recovering from the aftermath of the election. KI Design’s media monitoring data shows that 39% of online election sentiment is negative. Voters have experienced sustained disinformation campaigns that have compromised the electoral system and undermined public confidence in democratic processes. Disinformation – false…

Using AI to Combat AI-Generated Disinformation

Can AI impact election outcomes? How can this be combatted?

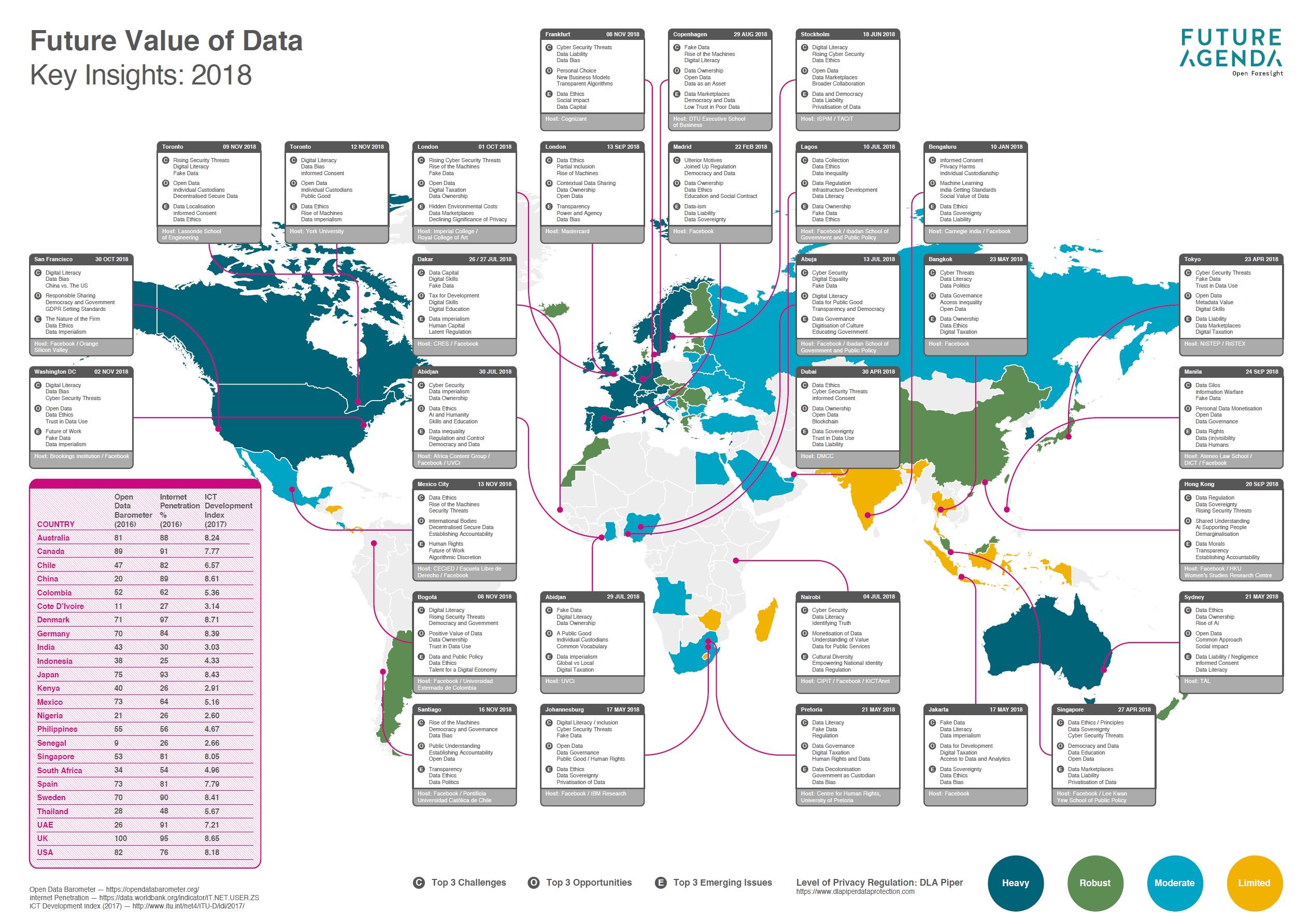

Future of Data

Infographic representing key issues concerning the future of data, broken down by country. Link to source: Future of Data

Urban Data Responsibility – The Battle for Toronto

The initial excitement over Alphabet’s SmartCity may be dwindling because of the perception that the tech giant will use the new Harbourfront development to collect personal data. The special attention given by interest groups to a project that has actually engaged the public and shown good faith may be teaching companies the wrong lesson: Don’t…

Are Malls “Grasping at Straws”?

Cadillac Fairview is tracking the public by using facial recognition technology! The news that the privacy commissioners of Canada and Alberta had launched an investigation into facial recognition technology used at Cadillac Fairview did not come as a surprise to many. The investigation was initiated by Commissioner Daniel Therrien in the wake of numerous media reports that…

Artificial Intelligence and Privacy: What About Inference?

How does AI impact privacy and security implementation? Big Data analytics is transforming all industries, including healthcare-based research and innovation, offering tremendous potential to organizations able to leverage their data assets. However, as a new species of data – massive in volume, velocity, variability, and variety – Big Data also creates the challenge of compliance…

Can Big Data be wrong – An election post mortem

Well, that’s a good question. Everyone is asking today what happened with the elections, thinking that all we knew and heard from media outlets was wrong. Big Data is subject to a few simple rules, which often get ignored. When the next election or event comes along, there is one thing to remember. Big Data has…