Series: Bringing Privacy Regulation into an AI World

Over the past decade, privacy has become an increasing concern for the public as data analytics have expanded exponentially in scope. Big data has become a part of our everyday lives in ways that most people are not fully aware of and don’t understand.

Moving from Access Control to Use Control

Bringing Privacy Regulation into an AI World, Part 5. In this post, I would like to outline a new concept, use control: a way of designing AI systems so that personal data can be used only for specified purposes.

Is AI Compatible with Privacy Principles?

Bringing Privacy Regulation into an AI World, Part 2. Many experts on privacy and artificial intelligence (AI) have questioned whether AI technologies such as machine learning, predictive analytics, and deep learning are compatible with basic privacy principles.

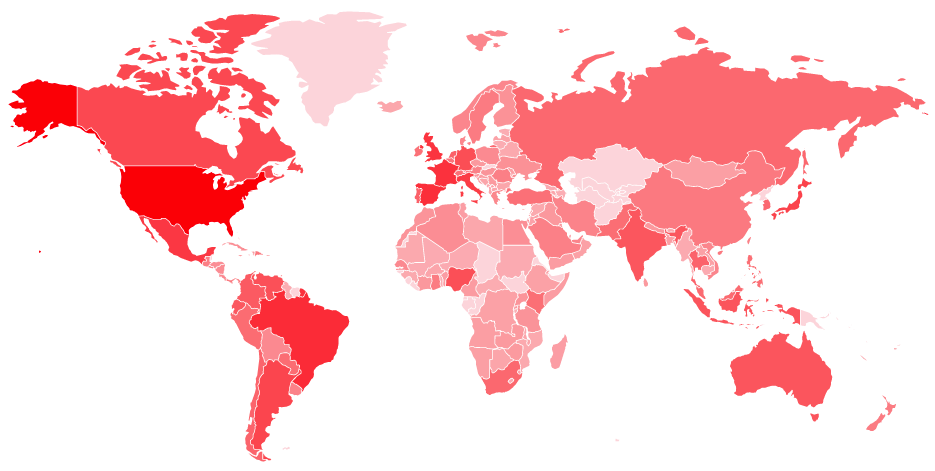

Police Use of AI-Based Facial Recognition – Privacy Threats and Opportunities

This article describes the issue of police use of AI-based facial recognition technology, discusses why it poses a problem, describes the assessment methodology, and proposes a solution. The CBC reported on March 3[1] that the federal privacy watchdog in Canada and three of its provincial counterparts will jointly investigate police use of facial-recognition technology…

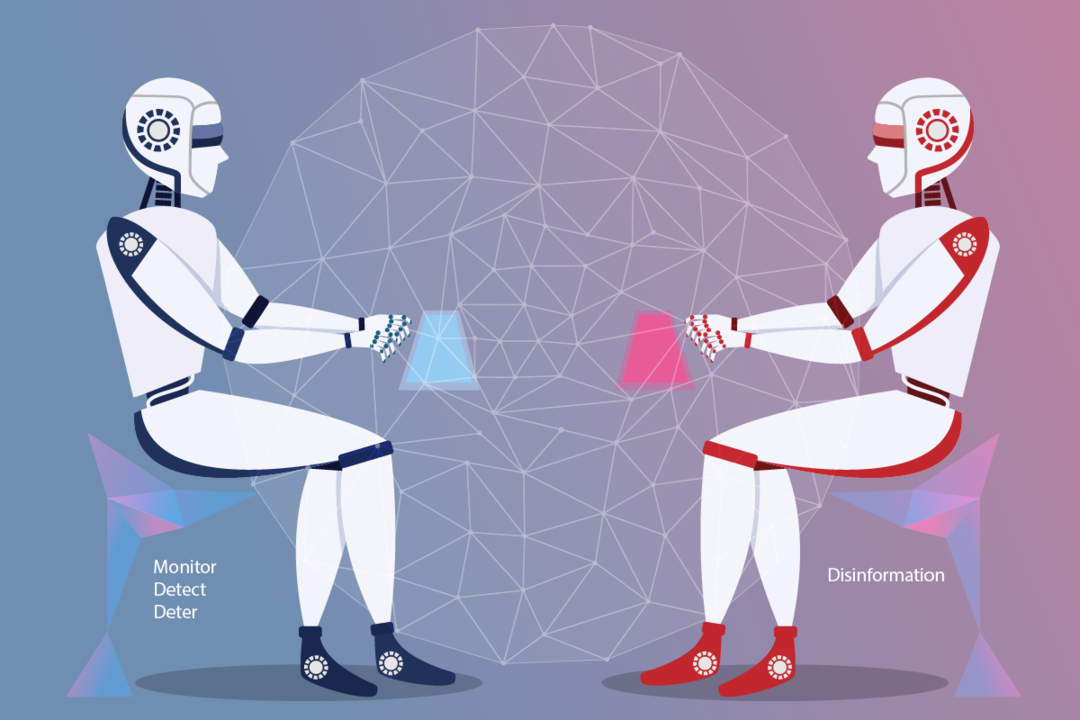

Using AI to Combat AI-Generated Disinformation

Can AI impact election outcomes? How can this be combatted?

Smart Privacy Auditing – An Ontario Healthcare Case Study

IPCS Smart Privacy Auditing Seminar. On September 13, Dr. Waël Hassan was a panelist at the Innovation Procurement Case Study Seminar on Smart Privacy Auditing, hosted by the Mackenzie Innovation Institute (Mi2) and the Ontario Centres of Excellence (OCE). The seminar attracted leaders from the healthcare sector, the private information technology industry, and privacy authorities.…

Artificial Intelligence and Privacy: What About Inference?

How does AI impact privacy and security implementation? Big Data analytics is transforming all industries, including healthcare-based research and innovation, offering tremendous potential to organizations able to leverage their data assets. However, as a new species of data – massive in volume, velocity, variability, and variety – Big Data also creates the challenge of compliance…