Seizing the Opportunities of Big Data

A good place to start with data analytics is to subscribe to reports on data trends, or to a trends-based web service. These services, such as Microsoft Insights, can provide valuable research data relevant to your business operations. Big data allows researchers to collect and analyze various industry trends and patterns, draw conclusions, and publish…

Series: Bringing Privacy Regulation into an AI World

Over the past decade, privacy has become an increasing concern for the public as data analytics have expanded exponentially in scope. Big data has become a part of our everyday lives in ways that most people are not fully aware of and don’t understand.

Moving from Access Control to Use Control

Bringing Privacy Regulation into an AI World, Part 5: I would like to outline a new concept of use control, a way of designing AI systems so that personal data can be used only for specified purposes.

Is AI Compatible with Privacy Principles?

Bringing Privacy Regulation into an AI World, Part 2: Many experts on privacy and artificial intelligence (AI) have questioned whether AI technologies such as machine learning, predictive analytics, and deep learning are compatible with basic privacy principles.

A 3D Test for Evaluating COVID Alert: Canada’s Official Coronavirus App

Great news: Canada has just released its free COVID-19 exposure notification app [1], COVID Alert. Several questions now arise: Is it private and secure? Will it be widely adopted? And how effective will it be at slowing the spread of the virus? We have evaluated the COVID Alert app along three dimensions: Concept, Implementation,…

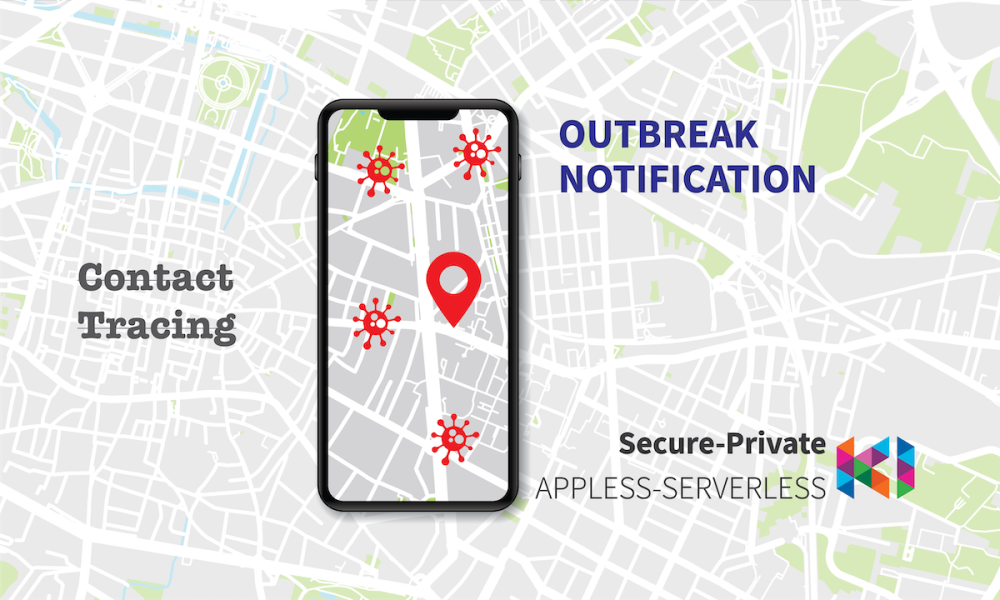

Outbreak Notification App Design

From Contact Tracing to Outbreak Notification: Call for Participation. As countries assess how best to respond to the COVID-19 pandemic, many have introduced smartphone apps to help identify which users have been infected. These apps vary from country to country. Some are mandatory for citizens to use, such as apps released by the governments of…

Do ‘Contact Tracing Apps’ Need a Privacy Test?

The coronavirus continues to cause serious damage to humanity: loss of life, employment, and economic opportunity. In an effort to restart economic activity, governments at every level (local, regional, and national) have been working on a phased approach to re-opening. However, re-opening carries a substantial risk of outbreaks. Epidemiological studies are showing that shutdowns have…

Blackbaud Breach – Executive Options in Light of Reports to OPC & ICO

Three Executive Actions to Help Mitigate Further Risk. If your company uses Blackbaud CRM, this article outlines three actions that will help mitigate risk. Blackbaud, a reputable company that offers a customer relationship management system, was hit by a ransomware attack and paid the ransom. According to G2, Blackbaud CRM is a cloud fundraising and…

Police Use of AI-Based Facial Recognition – Privacy Threats and Opportunities

This article describes the issue of police use of AI-based facial recognition technology, discusses why it poses a problem, describes the assessment methodology, and proposes a solution. The CBC reported on March 3 [1] that the federal privacy watchdog in Canada and three of its provincial counterparts will jointly investigate police use of facial-recognition technology…

Best-Practice Data Transfers for Canadian Companies – Part III – Vendor Contracts

PREPARING FOR DATA TRANSFER – CLAUSES FOR VENDOR CONTRACTS. A three-part series from KI Design: Part I: Data Outsourcing; Part II: Cross-border Data Transfers; Part III: Preparing for Data Transfer – Clauses for Vendor Contracts. The following guidelines are best-practice recommendations for ensuring that transferred data is processed in compliance with applicable privacy regulations. While a…

Best-Practice Data Transfers for Canadian Companies – Part II – Cross-border Data Transfers

CROSS-BORDER DATA TRANSFERS. A three-part series from KI Design: Part I: Data Outsourcing; Part II: Cross-border Data Transfers; Part III: Preparing for Data Transfer – Clauses for Vendor Contracts. When personal information (PI) is moved across federal or provincial boundaries in the course of commercial activity, it’s considered a cross-border data transfer. Transferring data brings…

Best-Practice Data Transfers for Canadian Companies – Part I – Outsourcing

DATA OUTSOURCING. In our digitally interconnected world, most organizations that handle personal information will transfer it to a third party at some stage of the data life cycle. Your company may send personal information (PI) to an external service provider such as PayPal to process customer payments; that’s a data transfer. Perhaps you hired…